AI is everywhere in tech conversations. Some people hype it as magic while others dismiss it as overblown. The truth is simpler. AI is a tool. Like any tool in engineering, its value depends on how it is used.

Used carelessly, it produces garbage. Used well, it creates leverage.

I don't rely on AI to write my code for me. I use it to learn faster, refine ideas, and clear out repetitive work so I can focus on the decisions and systems that matter. When I am exploring a new library, I can ask for examples in context. When I hit a confusing error message, I paste it in and get possible root causes in seconds. When I am choosing between two approaches, I can compare tradeoffs without having to dig through endless blog posts or Stack Overflow threads.

Most engineers stop at asking AI for snippets of code. That misses half the benefit. I use it as a critique partner. If I draft an API design, I ask what edge cases I might be missing. If I sketch out an architecture, I ask where it might break under load. If I put together a plan, I ask it to challenge my assumptions. The answers are not always right, but even when they are off, they push me to sharpen my own thinking and see blind spots earlier.

Instead of fighting AI, we should embrace it as a tool. Human progress has always followed this pattern. Every major leap came from adopting new inventions and finding ways to make them useful. Electricity transformed how we lived and worked. Automobiles and airplanes collapsed distances. Computers and the internet reshaped entire industries. In just the last 150 years we have arguably advanced more than in the thousands of years before, largely because we learned to harness these tools. AI is simply the next in that line. It is a turning point, and like every tool before it, it will get better the more we push it to meet our needs.

A good example came up when I was reviewing a design that involved processing jobs from a queue where reliability mattered. The system needed retries, but it also had to avoid hammering a downstream API. I already knew about exponential backoff, but I wanted to see if there were edge cases I was missing.

I asked AI to critique the design. It flagged that without jitter, simultaneous retries from many clients could create a thundering herd problem. It also suggested layering in a dead-letter queue for jobs that failed after multiple attempts. Neither idea was new to me, but having them surfaced in seconds let me validate my assumptions quickly and confirm the design before I moved ahead.

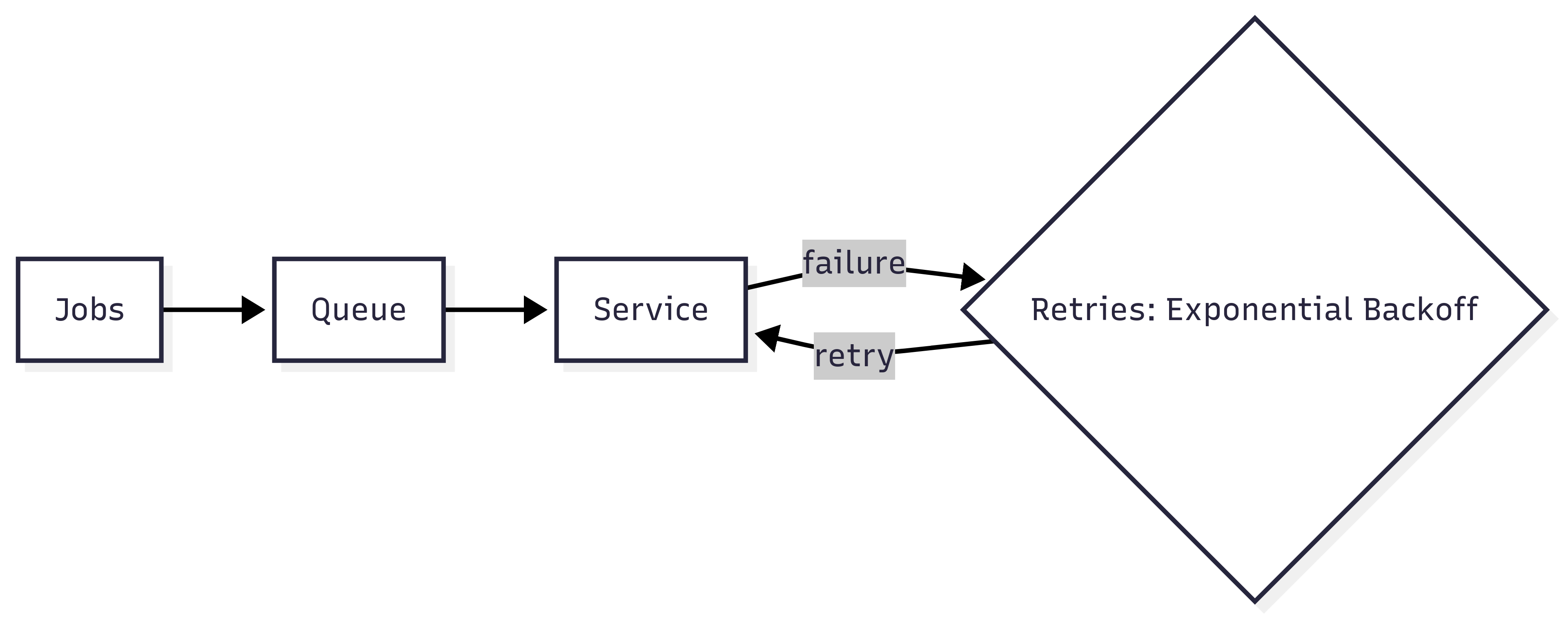

Initial design:

Jobs move into a queue and are retried with exponential backoff on failure.
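
To make the initial design concrete, here is a minimal sketch of how exponential backoff delays might be computed. The function name `backoff_delay` and the `base` and `cap` values are illustrative assumptions, not details from the system I was reviewing.

```python
def backoff_delay(attempt: int, base: float = 1.0, cap: float = 60.0) -> float:
    """Seconds to wait before retry number `attempt` (0-based).

    The delay doubles on each attempt and is capped so it never grows unbounded.
    `base` and `cap` are assumed values chosen for illustration.
    """
    return min(cap, base * (2 ** attempt))


# Delays for the first eight retries.
print([backoff_delay(n) for n in range(8)])
# [1.0, 2.0, 4.0, 8.0, 16.0, 32.0, 60.0, 60.0]
```

Note that every client failing at the same moment computes exactly the same schedule, so their retries stay synchronized.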

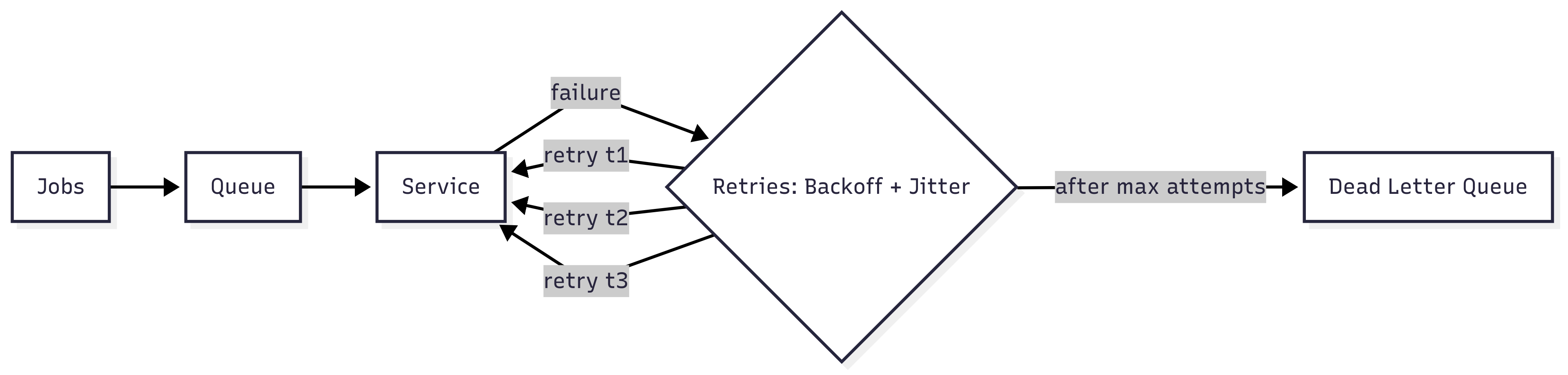

AI-suggested improvements:

Adding jitter reduces retry storms. A dead-letter queue catches jobs that fail after maximum attempts.
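
Both suggestions can be sketched in a few lines. This is a simplified, in-memory illustration rather than the actual system: it uses "full jitter" (a random delay between zero and the capped exponential value), which is one common variant among several, and a plain list stands in for a real dead-letter queue. The names `jittered_delay` and `process_with_retries`, and the default of five attempts, are assumptions made for the example.

```python
import random
import time
from typing import Callable, Iterable, List


def jittered_delay(attempt: int, base: float = 1.0, cap: float = 60.0) -> float:
    """Full jitter: pick a random delay in [0, capped exponential backoff]."""
    return random.uniform(0.0, min(cap, base * (2 ** attempt)))


def process_with_retries(
    jobs: Iterable[str],
    handler: Callable[[str], None],
    max_attempts: int = 5,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Run each job, retrying failures with jittered backoff.

    Jobs that still fail after `max_attempts` are returned as the
    dead-letter list instead of being retried forever.
    """
    dead_letters: List[str] = []
    for job in jobs:
        for attempt in range(max_attempts):
            try:
                handler(job)
                break  # success, move on to the next job
            except Exception:  # broad catch keeps the sketch short
                if attempt + 1 < max_attempts:
                    sleep(jittered_delay(attempt))
        else:
            # The loop finished without a break: every attempt failed.
            dead_letters.append(job)
    return dead_letters


def flaky_handler(job: str) -> None:
    """Hypothetical handler that always fails for one job."""
    if job == "job-2":
        raise RuntimeError("downstream API unavailable")


# Sleep is stubbed out so the demo runs instantly.
dead = process_with_retries(
    ["job-1", "job-2", "job-3"], flaky_handler, sleep=lambda _seconds: None
)
print(dead)  # ['job-2']
```

Because each client draws its own random delay, retries spread out over time instead of arriving at the downstream API in synchronized waves. A production version would catch only retryable errors and persist dead-lettered jobs somewhere durable.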

Neither concept was new to me, but AI gave me a quick critique partner. Instead of spending half an hour sanity-checking edge cases, I got feedback in seconds and could move ahead with confidence.

AI is also useful for clearing grunt work. Boilerplate code, simple tests, migration scripts, or even the first draft of a design document are all tasks it can handle quickly. What it cannot do is make judgment calls. If I don't understand what the model produced, I don't use it. That discipline makes the difference between outsourcing and acceleration.

There are also easy ways to get this wrong. If you paste AI-generated code into production without review, you will create problems later. If you use it as an excuse to stop learning, your skills will decay. If you rely on it to make critical design choices, you will end up with bloated and brittle systems nobody wants to own.

AI will not replace engineers in the near future, but an engineer using AI will replace one who does not.